Welcome back everyone 👋 and a heartfelt thank you to all the new subscribers who joined in the past week!

This is the 44th issue of the Gorilla Newsletter - a weekly online publication that sums up everything noteworthy from the past week in generative art, creative coding, tech and AI - as well as a sprinkle of my own endeavors.

Hope you're all having a great start to the week! Let's get straight into it! 👇

All the Generative Things

Lauren Lee McCarthy on Software Values

Yet again, Monk blesses our timelines with a stellar interview, this time with none other than Lauren Lee McCarthy. Having created p5.js back in 2013, and having served on the Processing Foundation's board of directors from 2015 till 2021, she has made tremendous efforts to build a more inclusive and accessible community for those who historically haven't had the privilege of learning to code or gaining tech literacy.

More than that, she is also an accomplished artist who creates profound conceptual work. In their conversation they discuss her most recent project, 'Seeing Voices', which is releasing with Bright Moments as part of the Paris AI collection. The artworks are a curated set of collages in which a system combines AI-generated messages with generative compositions made from drawings by McCarthy.

The generated messages echo her previous work 'Voices in my head', a collaboration with Kyle McDonald. Both pieces are about attributing meaning to software, raising questions about control and about how software can influence and change us. McCarthy states that software is not a neutral product: it inherits the ideas, biases and values of its creators.

I believe this is an incredibly important topic that isn't talked about enough. We engage with software on a daily basis, so what effect does it have on us? How are we collectively changing through our interactions with it?

And if that's not enough Le Random for you, there's another interview with Linda Dounia that I just haven't had the time to dig into yet:

Introduction to Sizecoding by Mathieu Henri

In last week's newsletter I mentioned Lovebyte 2024, a yearly online celebration of everything related to sizecoding and the demoscene. Although this year's edition of the event is over now, there are still a couple of cool things I missed that are well worth sharing.

For instance, there's the closing ceremony, which took place the same day I published the previous issue and presented all of the winning submissions. The event wrapped up with a whopping 351 submissions from participants all around the world:

Another really cool recording from the event is this video seminar by Mathieu Henri, who has been part of the demoscene since the very start and is still highly involved. In the video he introduces us to sizecoding in JavaScript and also tells us a little about the history of the demoscene:

Mathieu starts us off with some of the features that make JavaScript a great language for sizecoding, pointing out its leniency and some of the shenanigans that let us shave quite a few bytes off our code. He then recounts the early days of the demoscene on the web, showcasing some of the very first competitions held in that context and pointing out the competition websites (which somehow still work today) where participants' submissions were documented, such as Web4096, held in 1999, and the seemingly impossible 256B challenge in 2002, where you had to make a sketch in only 256 bytes.

Mathieu has documented his participation in some of these events on his portfolio, and even provides the code he submitted back then alongside modern equivalents. I was really blown away by his entry to the 256B challenge, in which he made a metaball sketch in just 256 bytes:
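For anyone curious how metaballs work in general: each ball contributes a field that falls off with distance, the contributions are summed, and every point above a threshold counts as "inside", which is what makes nearby balls blend into one blob. Here's a rough Python sketch of that idea. It is not Mathieu's 256-byte JavaScript entry; the r²/d² falloff, the threshold of 1.0 and the function names are my own assumptions.

```python
def field(x, y, balls):
    """Sum the r^2 / d^2 falloff of every ball at point (x, y)."""
    total = 0.0
    for bx, by, r in balls:
        d2 = (x - bx) ** 2 + (y - by) ** 2
        # Exactly at a ball's centre the falloff is unbounded.
        total += r * r / d2 if d2 > 0 else float("inf")
    return total


def render(width, height, balls, threshold=1.0):
    """Rasterise the thresholded field into rows of '#' (inside) and '.' (outside)."""
    rows = []
    for j in range(height):
        # Sample at pixel centres.
        row = "".join(
            "#" if field(i + 0.5, j + 0.5, balls) >= threshold else "."
            for i in range(width)
        )
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Each ball is (x, y, radius); these two are close enough to merge.
    balls = [(12.0, 8.0, 4.0), (21.0, 8.0, 3.5)]
    print("\n".join(render(34, 16, balls)))
```

With a threshold of 1.0, a lone ball's edge sits exactly at distance r from its centre. Two balls that are each just out of reach of a point can push the summed field over the threshold together, and that's where the gooey merging comes from.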

Patt Vira on the Persistence of Vision

I've become a huge fan of Patt Vira's content on YouTube; her code-along tutorials are very polished and easy to follow. Her video on marching squares got me back into exploring the algorithm in more depth after Genuary concluded, and unlocked a lot of ideas I wouldn't otherwise have had.

Patt has a knack for exploring interesting ideas. Here's one I really enjoyed, in which she explores the concept of 'Persistence of Vision', with a drawn cube that only appears within a noisy grid of pixels while it is in motion:

Persistence of vision is a phenomenon in which the visual perception of an object lingers for a brief moment after the object has actually vanished from view. Lightning at night is a good example: you can often still see the outline of a strike for an instant after it's gone. The Wikipedia page includes a nice excerpt from Leonardo da Vinci's notebooks on this:

The effect also seems to work remarkably well with a noisy grid of pixels. Patt was inspired by a recent video from Chris Long, in which he explains how he first discovered the effect back in the eighties while experimenting with line intersections on his home computer, noticing that the apparent shapes could only be made out while they were in motion:

The video's comment section is also really interesting, with others chiming in with their thoughts on the effect. Go show both of their channels some love and leave them a sub and a thumbs up! I definitely recommend keeping up with what Patt's doing; I have a hunch we're going to see a lot more stellar content from her in the coming months.
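I don't know exactly how Patt or Chris Long implemented their versions, but one common way to build this kind of motion-only shape is to keep the background noise static while the noise inside the shape slides along every frame. Any single frame is just uniform 50/50 noise, so the shape disappears as soon as you pause; only the coherent motion gives it away. The square mask below is a hypothetical stand-in for Patt's cube, and the function names are my own.

```python
import random


def square_mask(x0, y0, size):
    """Hypothetical stand-in for the cube: a filled square region."""
    return lambda x, y: x0 <= x < x0 + size and y0 <= y < y0 + size


def make_frames(width, height, mask, n_frames, seed=0):
    """Binary noise frames in which only the masked region's noise moves over time."""
    rng = random.Random(seed)
    # Static noise for everything outside the shape.
    background = [[rng.randint(0, 1) for _ in range(width)] for _ in range(height)]
    # A second noise texture that slides through the shape, one pixel per frame.
    texture = [[rng.randint(0, 1) for _ in range(width)] for _ in range(height)]
    frames = []
    for t in range(n_frames):
        frame = [
            [texture[y][(x + t) % width] if mask(x, y) else background[y][x] for x in range(width)]
            for y in range(height)
        ]
        frames.append(frame)
    return frames


if __name__ == "__main__":
    for frame in make_frames(40, 20, square_mask(12, 5, 10), n_frames=3):
        print("\n".join("".join("#" if v else " " for v in row) for row in frame))
        print("-" * 40)
```

Printed one after another, the frames look like identical static; played back as an animation, the square pops out because its pixels all drift in the same direction.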

Tezumie's AIJS Editor Updates

Tezumie has been hard at work, consistently adding new features to his AI-powered code editor, and it's been really exciting to see him turn his initial idea into an increasingly complete platform. It now boasts user profile pages, the ability to save sketches, an explore page and many more cool and convenient things. Tezumie frequently posts updates about it in the AIJS Discord:

One update I've been super excited about, but have yet to take for a spin, is custom embeds. I brought them up with Tezumie a couple of weeks ago, explaining why I don't usually use the p5 editor's or OpenProcessing's embeds. It's mainly because they slow down the initial page load, since each one has to load additional script files: if you have multiple embeds on a single page (a tutorial, for instance), they don't share those resources, and each individual embed loads its own assets instead:

Checking out the AIJS docs, it's always a breath of fresh air when effort goes into that aspect of a piece of software. I thought it was a really nice touch to also support Farcaster frames as a potential embed:

I think what's really cool about AIJS is that it's made by someone who is a creative coder and generative artist themself, building exactly the features that are useful for that specific workflow. OpenProcessing, you better watch out, Tezumie's coming for you!

Web3 News

A Web3 Travel Guide for Paris

Next week is going to be a big one, with everyone gearing up for the annual NFT Paris show. If you're visiting, you might also want to stop by some of the other spots in Paris that hold significance in the Web3 space. Ana Maria wrote a Web3 travel guide for the occasion on Forbes, pointing out the important galleries in the area along with some of their upcoming shows:

Btw, if you end up taking any cool pics or see any cool generative art and Web3 related things, send 'em over, I'd love to include them in the next issue of the newsletter!

Vetro Editions at Galerie Data

If you find yourself in Paris tomorrow (20th of February), make sure to stop by Galerie Data: they're hosting our favorite genart publishing house, Vetro Editions, to celebrate the launch of four of their books alongside some of the artists featured in them:

Their lineup includes the second issue of Matt DesLauriers' Meridian art book, Julien Gachadoat's recently released "Pathways", "Tracing the Line", which recounts the plotter work of many pioneering and contemporary generative artists, as well as an entirely new addition that just came out this past week for RCS:

RCS also published a companion announcement post on their site, in which Carla Gannis from the RCS team recounts the motivations behind starting the site and their role in amplifying the voices of the crypto art scene, along with a brief overview of what's happened in recent years. I believe the book features the 250 articles published on RCS over the past two years, and likely more material. If you're interested in getting the book, you can learn a bit more about it here:

Just to disclose, I'm not sponsored by any of the mentioned parties - I simply think that Vetro Editions makes really awesome books, and all of these are very worth having in your genart book collection.

The Confusing 2024 Crypto-Art Meta

Although 2024 is shaping up to be a year with many new and exciting Web3 developments, and while there are many efforts to make things more interoperable, things also seem to be getting more and more fragmented. I'm personally having a really hard time keeping up with everything, and I suspect I'm not the only one.

Sgt_slaughtermellon wrote an interesting think piece about the current state of crypto art, painting the bigger picture of what's happening across the different marketplaces and chains, how artists can adapt, and why it all feels just a bit too chaotic:

Anna Lucia's Spellbound

One more thing I wanted to share here is Anna Lucia's newest project on fxhash, a redeemable collaboration with the German fashion lab Phoebe Heess. Conceptually, the artwork is fascinating: via fxhash's code-driven parameters, collectors can record their voice or upload an audio file, and the waveform of that audio then determines the pattern visualized on the scarf:

As the first manufactured redeemable on fxhash, this is a very special digital token to collect.

Tech and AI

Mario Kart 3.js in the Browser

A fan of Mario Kart? Well, you can now play it in your browser here, and you can even build your own version of it using Alex Lunakepio's recently open-sourced project:

Here's a link to the GitHub repo, which has amassed a whopping 1.2k stars in just a couple of days. There isn't too much to say here, other than that it's a really cool project:

10 Minecraft Facts by Alan Zucconi

Minecraft has quite a long history: it was very popular when it first came out in 2009, but slowly fizzled out over the 2010s. The game then saw a massive boom in 2019 when PewDiePie returned to it, popularizing it again and sparking something of a renaissance. It seems to have retained its popularity even in 2024, with over half a million daily players.

I'm bringing all of this up because Minecraft is also interesting from a computational point of view. I recently came across a retweet of a thread Alan Zucconi posted back in 2022, listing 10 facts about the game that mostly revolve around its world generation system, which I found fascinating:

If you don't know Alan Zucconi, he's a bit of a superstar in the game dev scene, with an impressive number of tutorials on his blog. If you're getting into game dev, you need to check it out:

OpenAI's text to video model Sora

It's been a minute since I was genuinely impressed by AI; the last time I was floored was when I took DALL-E 3 for a spin (which is freely accessible via Bing). OpenAI's new Sora model seems to take things to the next level, generating coherent, realistic, high-quality videos from an input prompt. OpenAI has already shared a number of videos on their announcement page showcasing some of the strengths and weaknesses of the new model:

The videos aren't perfect: specific giveaway details still make it fairly easy to tell that they're AI generated. For instance, the model doesn't seem to know much about real-world physics. One of the generated videos that's been making the rounds features a team of archeologists digging up a plastic chair; the chair materializes from the sand and floats around the scene almost as if it isn't part of it:

The chair was most definitely an unpaid actor in this scene. Another thing that immediately jumped out at me was how eerily synchronous the motion sometimes is in the generated videos, like the way pedestrians in the background walk, or the waves crashing onto the cliffs in some of the other videos. It all feels a little surreal. On the other hand, it seems we're incredibly close to something that might just transform the entire movie, VFX and video game industries: the model can generate video not only from input prompts but also from input images and videos. Here's a link to a more technical write-up from the OpenAI team:

AlphaCode2: Gemini for Competitive Programming

Google recently replaced their previous AI-powered chatbot Bard with a more powerful LLM called Gemini:

There seem to be quite a few differences: Gemini is built on a more modern model architecture, and like the other chatbots out there it's multimodal, an important feature for staying competitive. What's more, they built another model on top of Gemini, called AlphaCode2, that is specifically designed for solving programming problems, even at the competitive level. They announced it in the following video:

The cool thing about AlphaCode2 is that it provides not only an answer but also its reasoning and how it approaches a given problem:

As of now it isn't publicly available, so we'll have to take their word for it. Looking into the previous AlphaCode model, I also couldn't find any concrete pointers to where it can be taken for a test run, or whether it has been open-sourced anywhere, apart from this demo. They do, however, have an interesting write-up on that model from back in 2022:

Gorilla Updates

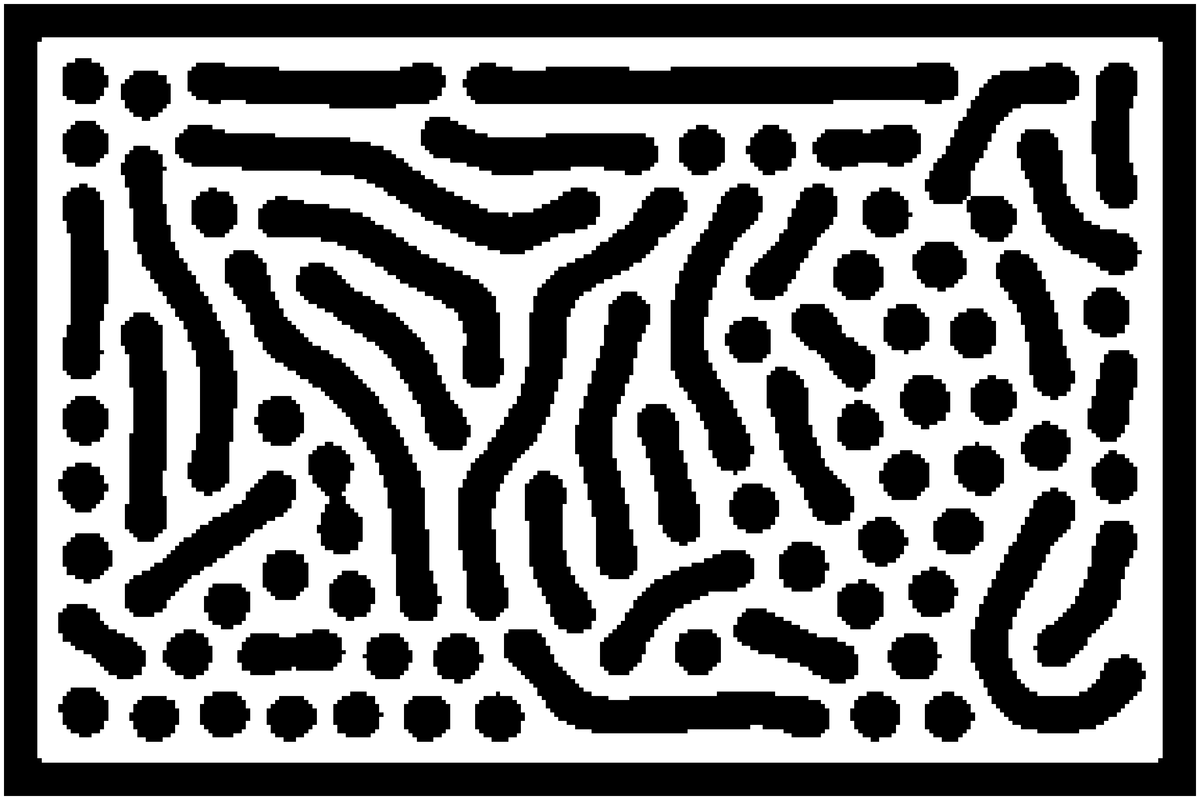

What have I been up to this week? I resumed my exploration of marching squares, extending the code so that it can also find the contours of arbitrary polygonal shapes. Here's what this looks like:

I think there are already some interesting possibilities here that are worth exploring in more depth in the future. The trick is to use ray casting to check whether grid vertices fall inside the polygonal shapes, marking them as active if they do, so that the marching squares algorithm can subsequently find the contours. This can be extended with a point-line distance function that measures how close each grid vertex is to the polygonal region and assigns it an activation value based on that proximity, which leads to the nicely rounded-off contours that I thought looked really great.
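For the curious, here's a minimal Python sketch of the two ingredients described above: an even-odd ray casting test for whether a grid vertex lies inside a polygon, and a point-to-segment distance that drives a proximity falloff outside it. This is a simplified reconstruction rather than the actual code behind these sketches; the linear falloff and the names `vertex_value` and `sample_grid` are assumptions.

```python
import math


def inside_polygon(p, poly):
    """Even-odd ray casting: cast a ray from p towards +x and count edge crossings."""
    x, y = p
    inside = False
    n = len(poly)
    for k in range(n):
        x1, y1 = poly[k]
        x2, y2 = poly[(k + 1) % n]
        # Only edges that straddle the horizontal line through p can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def segment_distance(p, a, b):
    """Shortest distance from point p to the line segment a-b."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    len2 = dx * dx + dy * dy
    # Project p onto the segment and clamp the projection to its endpoints.
    t = 0.0 if len2 == 0 else max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def vertex_value(p, poly, falloff):
    """1.0 inside the polygon; outside, fades linearly to 0.0 over `falloff` units."""
    if inside_polygon(p, poly):
        return 1.0
    n = len(poly)
    d = min(segment_distance(p, poly[k], poly[(k + 1) % n]) for k in range(n))
    return max(0.0, 1.0 - d / falloff)


def sample_grid(poly, cols, rows, spacing, falloff):
    """Vertex values for a (rows + 1) x (cols + 1) grid, ready for a marching squares pass."""
    return [
        [vertex_value((i * spacing, j * spacing), poly, falloff) for i in range(cols + 1)]
        for j in range(rows + 1)
    ]


if __name__ == "__main__":
    triangle = [(2.0, 2.0), (10.0, 3.0), (5.0, 9.0)]
    for row in sample_grid(triangle, 12, 11, 1.0, 2.0):
        print(" ".join(f"{v:.1f}" for v in row))
```

With a linear falloff and a marching squares threshold of 0.5, the traced contour sits roughly `falloff / 2` outside the polygon, and its convex corners come out as circular arcs, which is one way to get that rounded-off look.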

This all started after browsing Pinterest for inspiration, a platform I've grown to love more and more over the past year. I found an interesting artwork that immediately drew me in:

I couldn't find the artist behind the drawing (image on the left; if you know the artist, let me know), but I thought it was brilliant and it gave me many ideas. I immediately had to ask myself what it would look like in generative form. The first step would be to figure out how to create the shape of a puzzle tile algorithmically, and that turned out to be trickier than I'd initially assumed.

Whenever you're trying to make a shape that's a bit more complicated than a simple circle or a convex regular n-gon, you run into all sorts of problems. Although marching squares is a viable approach, it comes with its own set of drawbacks. For instance, the smoothness of the final 'rendered' shape depends entirely on the resolution of the underlying grid used to trace the contours, and the higher the resolution of that grid, the longer the shape takes to render.
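To make that resolution trade-off concrete, here's a generic Python sketch of the marching squares bookkeeping (not the code from my sketches; the names are hypothetical). Each cell is classified by which of its four corners are active, giving one of 16 cases, and the point where the contour crosses an edge is found by linear interpolation. A grid with n cells per side has n² cells that all need classifying, while only the few along the boundary actually produce line segments.

```python
def cell_case(grid, i, j, threshold=0.5):
    """4-bit marching squares case (0-15) for the cell whose top-left vertex is grid[j][i]."""
    tl = grid[j][i] >= threshold
    tr = grid[j][i + 1] >= threshold
    br = grid[j + 1][i + 1] >= threshold
    bl = grid[j + 1][i] >= threshold
    return (int(tl) << 3) | (int(tr) << 2) | (int(br) << 1) | int(bl)


def edge_crossing(v0, v1, threshold=0.5):
    """Fraction (0-1) along an edge where the field crosses the threshold.

    Only meaningful when v0 and v1 lie on opposite sides of the threshold.
    """
    return (threshold - v0) / (v1 - v0)


def contour_cells(grid, threshold=0.5):
    """Cells that are neither fully outside (case 0) nor fully inside (case 15)."""
    rows, cols = len(grid) - 1, len(grid[0]) - 1
    return [
        (i, j)
        for j in range(rows)
        for i in range(cols)
        if cell_case(grid, i, j, threshold) not in (0, 15)
    ]


def circle_grid(n, radius=0.35):
    """Binary field of a circle sampled on an (n + 1) x (n + 1) vertex grid over the unit square."""
    return [
        [1.0 if (i / n - 0.5) ** 2 + (j / n - 0.5) ** 2 <= radius ** 2 else 0.0 for i in range(n + 1)]
        for j in range(n + 1)
    ]


if __name__ == "__main__":
    for n in (10, 40, 160):
        cells = contour_cells(circle_grid(n))
        print(f"n={n}: {n * n} cells classified, {len(cells)} of them on the contour")
```

With a binary field like `circle_grid`, every interpolated crossing lands at an edge midpoint, so the only way to smooth the outline is a finer grid; a continuous field (such as a distance falloff) lets the interpolation place contour vertices more precisely at the same resolution.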

The code ended up being an absolute mess by the time I finally managed to make it produce the shape of a puzzle tile, which can be done by lining up 4 circles along the edges of a square and then letting the contour algorithm do its magic. Inward dents are achieved by marking the circles as "negative" so that they toggle off the grid vertices they cover. One thing I really wanted was the round, organic feel that many puzzle tiles have, which was difficult to achieve given how the vertices of the final shape were distributed along its contour.
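Here's a stripped-down Python sketch of that vertex activation step: a square with one circle centred on the midpoint of each edge, where positive circles switch grid vertices on (knobs) and negative ones switch them off (dents). It's a hypothetical reconstruction rather than my actual code; the tab radius, the `tabs` tuple and the function name are assumptions, and y grows downwards as in canvas coordinates.

```python
def puzzle_tile_active(x, y, size=1.0, tab_radius=0.18, tabs=(1, -1, 1, -1)):
    """Return True if the grid vertex (x, y) belongs to the puzzle tile.

    The tile is the square [0, size] x [0, size] (y grows downwards) with one circle
    centred on the midpoint of each edge, ordered top, right, bottom, left.
    tabs[k] = +1 switches vertices inside that circle on (a knob),
    -1 switches them off (a dent), and 0 leaves the edge flat.
    """
    half = size / 2
    centres = [(half, 0.0), (size, half), (half, size), (0.0, half)]
    active = 0.0 <= x <= size and 0.0 <= y <= size
    for (cx, cy), sign in zip(centres, tabs):
        if (x - cx) ** 2 + (y - cy) ** 2 <= tab_radius ** 2:
            if sign > 0:
                active = True
            elif sign < 0:
                active = False
    return active


if __name__ == "__main__":
    n = 24  # vertices per unit; sampled with a margin so the knobs fit
    for j in range(-6, n + 7):
        print("".join("#" if puzzle_tile_active(i / n, j / n) else "." for i in range(-6, n + 7)))
```

Because the circles are applied in order, overlapping positive and negative circles would let the last one win; with a tab radius this small they never overlap. Feeding these on/off vertices into marching squares is also exactly where the jagged, resolution-bound outline comes from.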

After spending nearly three days on this exploration, I ultimately concluded that marching squares wasn't the right approach for this task. I probably could have spent some time optimizing my code, but I just wanted to get the idea working and see what it looked like. I ended up using the polybool.js library, which lets you composite geometric shapes via boolean operations, a topic I'm likely going to talk about more at some point. Here's what I ended up with:

I'm happy with where it's at now, but this isn't its final form yet.

Music for Coding

The opening to Evangelion is an absolute masterpiece and arguably one of my favorite songs of all time. I must've listened to a few dozen different versions of it over the past years, and I recently had a craving to listen to it again. One of the results that popped up in YouTube search was this a cappella version by Ignite Vocal, and I was instantly floored. What an absolute banger:

The group is simply stellar; there isn't a single thing to dislike about their rendition, and their other stuff is great as well. I'm surprised they only have 619 subscribers on their channel (at the time of writing); I think they deserve at least a hundred times that number.

And that's it from me this week! As I type out these last words, I bid you farewell; hopefully this caught you up a little on what's been happening in the world of generative art, tech and AI over the past week.

If you enjoyed it, consider sharing it with your friends, followers and family. More eyeballs/interactions/engagement helps tremendously, since it lets the algorithm know that this is good content. If you've read this far, thanks a million!

If you're still hungry for more generative art things, you can check out the most recent issue of the Newsletter here:

Previous issues of the newsletter can be found here:

Cheers, happy sketching, and again, hope that you have a fantastic week! See you in the next one - Gorilla Sun 🌸

If you're interested in sponsoring the Newsletter or advertising, reach out!