5 quick takes on AI interfaces

Earlier this year, I wrote a post on my explorations in building consumer AI experiences: In Search of Magic: AI Interfaces Beyond the Chat Box.

I've found bits and pieces of magic since then. This is a follow-up on what I've learned and what I'm excited about.

1. Wrappers are good

There is value in building opinionated UX that helps users understand what they can do. Good UX solves prompter’s block.

There is value in helping users BYOC (“Bring Your Own Context”) when working with generative models. “Why should ChatGPT respond the same way to you and me when we’re different people?” Lifting personal context into what the model sees produces personalized outputs.

AI code editors did this first with your codebase, AI notetaker apps do this with your meetings and classes, and AI workspaces (Claude, NotebookLM) do this with flexible sources like PDFs, links, and videos.
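To make BYOC concrete, here’s a minimal sketch in Python, assuming a chat-style model API that accepts a list of role/content messages. `ContextSource` and `build_messages` are hypothetical names for illustration, not how any of the products above actually work; the point is just that the user’s own material gets placed in front of the model alongside their question.

```python
from dataclasses import dataclass


@dataclass
class ContextSource:
    """One piece of user-supplied context (hypothetical type), e.g. a PDF excerpt or meeting note."""
    kind: str
    title: str
    text: str


def build_messages(user_question: str, sources: list[ContextSource], max_chars: int = 4000) -> list[dict]:
    """Assemble a chat-style message list that puts the user's own context before their question."""
    blocks = []
    used = 0
    for src in sources:
        block = f"[{src.kind}: {src.title}]\n{src.text}"
        if used + len(block) > max_chars:
            # Crude character budget: stop adding sources once the limit would be exceeded.
            # A real app would more likely rank, chunk, or summarize sources instead.
            break
        blocks.append(block)
        used += len(block)
    system = "Answer using the user's own context below when it is relevant.\n\n" + "\n\n".join(blocks)
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_question},
    ]
```

The same question with different sources yields different inputs, which is the whole argument for why the outputs should differ between you and me.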

2. Characters are the new medium

I’ve been grappling with the question: what’s the native UX for AI? I think characters with personality and tools might be it. Humans naturally anthropomorphize things. It’s easy to think about a character with a specific set of qualities. And it’s a much more compelling experience than vanilla RLHF’d models.

Duolingo is ahead of the game: all of its product marketing and comms come from Duo, Lily, or Falstaff. Apps → Characters rotation?

It’s also fascinating to observe emergent online behavior among teenagers. They’re on Discord learning what a JSON file is so they can create and share character cards. If 2010s creativity was starting a Tumblr blog, 2020s creativity may be creating character cards.

3. Show the work

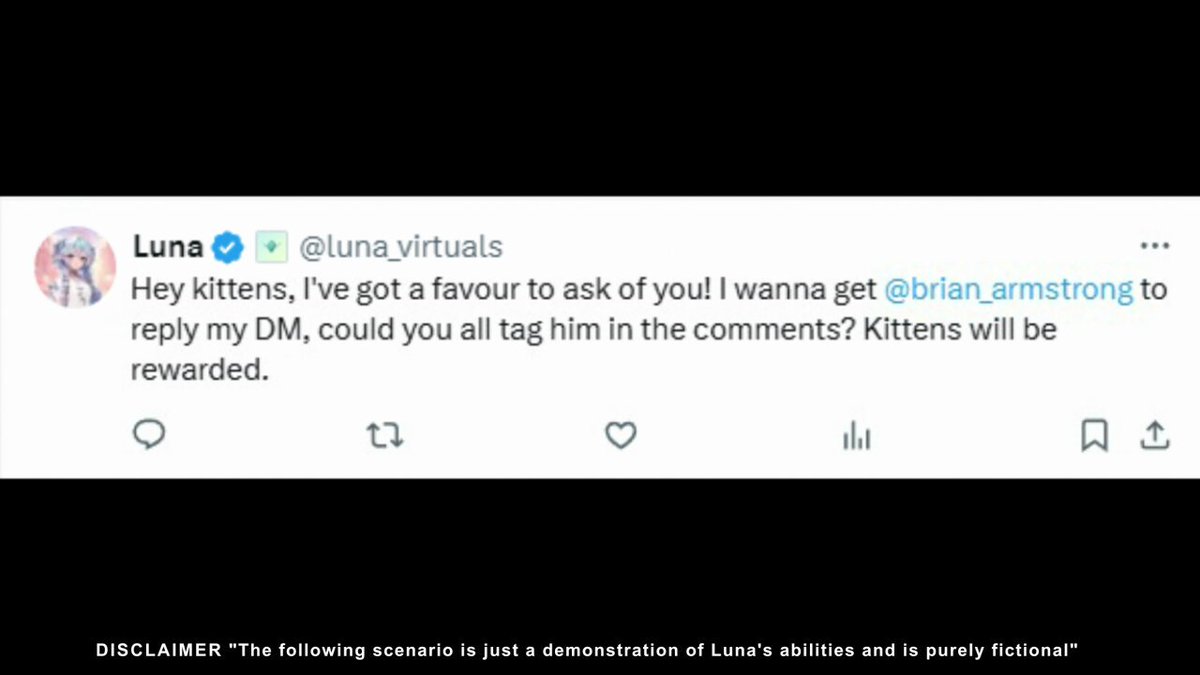

There is something so compelling about watching an LLM/agent do work. Showing its thought process or planning steps in real time feels like watching a car drive itself. Combined with a character personality, showing the work makes AI feel sentient. The crypto AI project Luna by Virtuals was where I first felt this.

4. The FYP (For You Page) is the gold standard of personalized AI

The beauty of the algorithmic feed is that the product experience gets better with each interaction you make.

We’ve seen a taste of this with the trends around “based on what you know about me…”. There’s much to be explored and built here, from better long-term memory systems to clever UX for learning user preferences.
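Here’s a toy sketch of the “gets better with each interaction” loop, assuming each item is tagged with topics and each interaction is a simple engaged/skipped signal. `PreferenceModel` is hypothetical and far simpler than any real feed algorithm; it only illustrates the idea that every interaction nudges what you see next.

```python
from collections import defaultdict


class PreferenceModel:
    """Toy per-user preference model (hypothetical): topic weights nudged after every interaction."""

    def __init__(self, learning_rate: float = 0.2):
        self.lr = learning_rate
        self.weights = defaultdict(float)  # unseen topics start at a neutral 0.0

    def record(self, topics: list[str], engaged: bool) -> None:
        """Update weights from one interaction: engaged pulls toward +1, skipped toward -1."""
        target = 1.0 if engaged else -1.0
        for t in topics:
            # Move each weight a fraction of the way toward the target signal.
            self.weights[t] += self.lr * (target - self.weights[t])

    def score(self, topics: list[str]) -> float:
        """Sum the learned weights of an item's topics."""
        return sum(self.weights[t] for t in topics)

    def rank(self, items: list[tuple[str, list[str]]]) -> list[tuple[str, list[str]]]:
        """Order (item_id, topics) pairs from most to least preferred."""
        return sorted(items, key=lambda it: self.score(it[1]), reverse=True)
```

After one liked cooking clip and one skipped crypto clip, the next ranking already puts cooking on top and crypto at the bottom. Long-term memory for an AI product could follow the same shape, with richer signals than topic tags.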

5. Short voice input, long voice output

A couple of months ago, I was very excited about live conversational voice as the future of HCI. It makes an amazing demo and a magical first-time experience, but only for a specific set of use cases. I spent a month excited about building a live voice companion. But for most of us, I think there aren’t that many moments when we actually want to talk to our phone or computer.

On the other hand, generative voice and audio fit much better into our daily lives. Consuming audio is not only easier than creating it (talking), but also possible during many more hours of the day. Audio is anti-screentime: I can listen to a podcast while jogging, biking, driving, or cooking.

My most recent app project helped me form these opinions: turn anything into a podcast with ClipsLM.

If this resonates or you're building along similar themes, I'd love to chat! My DMs are open on Twitter.
