Contextual Writing

Language model feedback loops

The following text was initially created in partnership with the Goethe Institut in August 2021 as part of a series focusing on the cultural and social impacts of artificial intelligence. I decided to introduce artificial intelligence in an unconventional manner to the readers: the article was co-authored with an artificial neural network. At that time, the system's model (referred to as GPT-3) had reached an advanced stage, capable of producing coherent, human-readable short and medium-sized texts with syntax similar to what it had been trained on. During the creative process, the network contributed to and completed my thoughts, leading the narrative through a series of feedback loops between its recommendations and my reflections. The sentences' structure and spelling remain unchanged from the interactive text generation sessions, with minor edits made for better readability. To distinguish between my input text and the transformer's completions, my prompts are in regular, bold font, while the transformer's responses are in italic font, like the text you're currently reading. Before delving into the original text, let me provide some

At the time of writing the original text, these large language models were not yet available to the general public. Interested artists, researchers and writers could use different entry points to work with these networks. It was already clear that the technology was heading in two different directions: open-source, self-tuned models that are trained locally or on shared computers (I was using GPT-Neo in a few projects), and closed-source, centralized models accessed as software as a service (like OpenAI's ChatGPT, Google's Bard, etc.). For this particular piece I used OpenAI's GPT-3 DaVinci model, which is being discontinued and shut down as of January 4, 2024.

At the time I wrote the original text, I had prior experience working with deterministic artificial tools such as programming languages, Markov chains, and other formalized systems. Therefore, I was genuinely enthusiastic about the prospect of working with a probabilistic system—one that is contextually aware and capable of surprising me with uncertain paths, even if those arose from errors or unforeseen factors such as machine hallucinations. In contrast to typical errors in code, where a function may fail to run when called, large language models continue to operate even if they lack the necessary capabilities to provide a meaningful response to an instruction. Such circumstances often result in fascinating consequences at the semantic level. Several of the subsequent concepts and topics emerged through loosely controlled feedback loops between myself and the model.

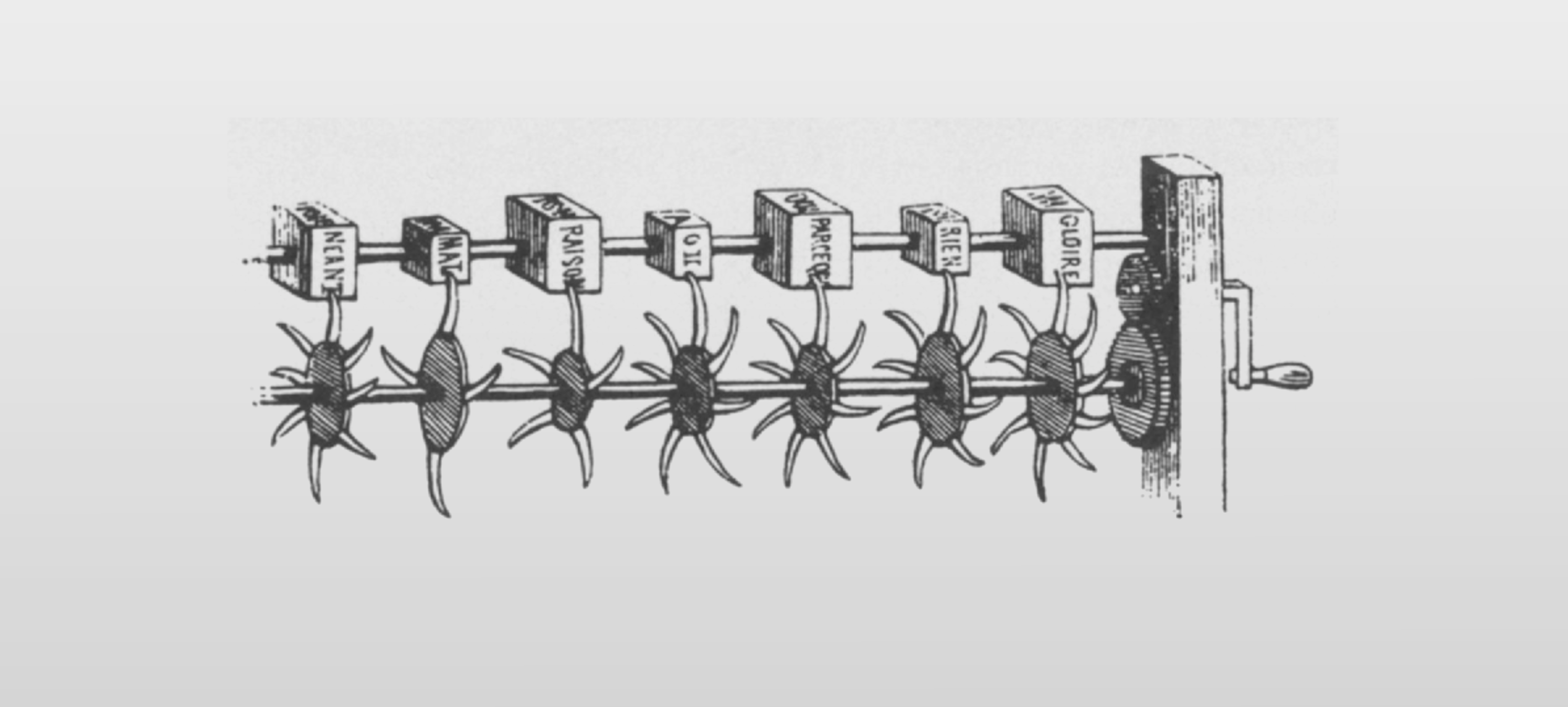

Another interesting aspect of early LLMs (like DaVinci) is their distinctive, spontaneous creativity: they had not been shaped by reinforcement learning from human feedback (RLHF), a more recent, widely used method that combines reinforcement learning (RL) with human feedback to train models to generate outputs that are both informative and aligned with human values. The first published models and their responses were so otherworldly, and sometimes magical in nature, that they inspired many creatives who work with text, meta-narratives and poetry, including K Allado-McDowell, the co-author of Pharmako-AI, and Nora N. Khan, among others. The idea of outsourcing human cognitive tasks to the external environment is of course not new. Many authors have used automatic writing, chance, probability, random heuristics or other semantic devices in their process, including Ramon Llull, Arthur Conan Doyle, William S. Burroughs and Jorge Luis Borges, to name just a few.

In our environment, ecological systems are complex networks that operate on nested levels. As an example, evolutionary game theory can help understanding nonlinear connections. When we try to find the relevant path in a labyrinth, we do not try to explore all possible alternatives. We need to find a path in the shortest time that has the minimum number of twists and turns. We need to find a path where there is no chance of getting lost, where we can encounter the fewest possible obstacles and where we can see our final goal. The basic elements for finding a good path are the same as in the evolutionary game theory: fitness and the value of the goal. Fitness is the capacity of an entity to accomplish a given task, and the value of the goal is a measure of the importance of the goal. The ability of the navigator in the labyrinth is analogous to the fitness of an individual in the population of a swarm.

Selecting the right components to express something is an essential aspect of a creative process. Artists tend to act like curators when working with multiple possibilities that are based on pre-existing material. Collages, collections, series, variations are all ways to create a coherent picture from symbols and fragments that are already present in the environment. The success of the result is proportional to the number of expressions that are used. The defining characteristic of a complex system is that the entity has the ability to self-organize, i.e. to be able to form a network of connections between parts that are already available in the environment. A chaotic system is a system in which there is no clear coherence, i.e. no clear pattern or sequence of events that can be traced and used as a model for creating more of the same. A complex system is one where there is a clear pattern, but unpredictability is still present.

When considering ways to enhance the human mind beyond its natural capacities in the creative process, there are intriguing tools that can be integrated into the workflow. Aleatoric compositions, chance operations, automatic writing, cut-up poetry and artificial neural networks, especially generative pre-trained transformers such as the one involved in this article, are all examples of tools that can provide a matrix of possibilities for the artist-technologist to work with and explore. A generative pre-trained transformer is a service, in this example, that takes text and returns other text. The output text is a transformation of the text it is given. The transformation can be a simple one such as a translation from one language to another, or a complex transformation of the text into poetry, or something more esoteric.

Accessing these unexplored territories of mutual knowledge between a human with subjective constructions and an artificial entity that has been trained on myriad textual representations that can be found on the internet brings forward an unusual method of collaboration between an artist-technologist and a machine entity. In the case of this project, the only information shared between the human artist and the machine entity was the text and a few parameters. The transformation that occurred was both surprising and illuminating. The two entities were able to share a new way of looking at text, and in so doing, created a way for the artist to more easily communicate his poetry.
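As a rough illustration only, the exchange described above (text and a few parameters going in, transformed text coming back, and the result feeding the next turn) can be sketched in Python. Everything here is an assumption made for the sketch: `complete` is a hypothetical stand-in implemented as a toy bigram sampler, not GPT-3 or any real API, and the corpus, the parameter names `max_words` and `temperature`, and the `feedback_loop` helper are invented for the example.

```python
import random
from collections import defaultdict

# Tiny stand-in corpus (assumption); a real model is trained on vastly more text.
CORPUS = (
    "the cloud is the paper and the paper is the cloud "
    "the artist reads the text and the machine returns the text"
)

def build_bigrams(corpus):
    """Count which word follows which in the corpus."""
    table = defaultdict(lambda: defaultdict(int))
    words = corpus.split()
    for current, following in zip(words, words[1:]):
        table[current][following] += 1
    return table

def complete(prompt, table, max_words=8, temperature=1.0, rng=None):
    """Hypothetical stand-in for a model call: text in, continuation out.

    temperature must be > 0; lower values favour the most frequent follower.
    """
    rng = rng or random.Random()
    words = prompt.split()
    last = words[-1] if words else ""
    out = []
    for _ in range(max_words):
        followers = table.get(last)
        if not followers:
            break  # nothing is known to follow this word, so stop
        candidates = list(followers)
        weights = [followers[w] ** (1.0 / temperature) for w in candidates]
        last = rng.choices(candidates, weights=weights, k=1)[0]
        out.append(last)
    return " ".join(out)

def feedback_loop(human_turns, table, **params):
    """Alternate human input and machine completion, carrying the text forward."""
    text = ""
    for turn in human_turns:
        text = (text + " " + turn).strip()           # the human adds a thought
        completion = complete(text, table, **params)  # the machine continues it
        text = (text + " " + completion).strip()      # the result is the next context
    return text

if __name__ == "__main__":
    table = build_bigrams(CORPUS)
    print(feedback_loop(["the artist", "the machine"], table,
                        max_words=5, temperature=0.8, rng=random.Random(0)))
```

The point of the sketch is the loop rather than the sampler: each completion becomes part of the context for the next human turn, so small changes in the input or the parameters can steer the whole text.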

Our lives are supported by complex algorithms that remain imperceptible to the naked eye. In fact, the finest interfaces are invisible; they operate beyond the observer's perception, allowing the experience to unfold naturally. For example, while we are reading a book, we do not realize the appearance of the letters on the paper sheet constantly, but construct inner representations and virtual worlds from the written words. However, instead of a book, when dealing with algorithms, it becomes more difficult to make a distinction between the “real” world and the “virtual” world, between the text on the screen and its inner representations. The result is a text and a system that acts as a single entity, a system that is capable of representing the author in a new way which is not only in the words, but in the way the words are arranged. It results in a new form of communication that is different from the previous forms of communication.

It is to say that we need to understand the logic of communications as a logic of techne, a logic of mass mediation and not a logic of self-expression or persuasion. The force of digital media is neither moral nor immoral — it is neither a subject nor an object — but is a force of organization. Organization is a politics. This is why, in Parmenides’s poem, the goddess of truth is called Aletheia (disclosure) and not Metis (intelligence). She is the power of organization. Appropriating the ancient Greek term for wisdom, her name refers to the art of organizing knowledge. Equally, the Greeks thought of divine power (dunamis) as the productive force of organization.

Digital technology in the twenty-first century is characterized by a dialectical setting in which disparate aspects no longer operate in an oppositional mode, although their dialectical relation has not collapsed, but rather shifted to a setting in which the “contradictions have become intrinsic and cannot be resolved in a simple positive-negative way.” It is at this moment that we can witness the emergence of what Buck-Morss and Virilio have described as a “new media war” in which “the ideological coordinates of the argument, even at the level of propaganda, tend to disappear into a deeper matrix of disinformation — a state of war without a state of war.” This, as Buck-Morss and Virilio have argued, is a state of war that “does not involve one, but a multiplicity of terrains: military, economic, political, diplomatic, cultural, and surveillance,” along with, and most importantly, “technological terrains,” including “the control over the means of digital communication” and a “processing of information on a global scale.

Neural networks can be found in both natural systems and in artificial tools, and since they are built on similar principles, they have a lot of similarities. There are a few reasons I chose a neural network to be the co-author of this article, one of them is that we both share similar logical structures (neurons), so we can alter the coherence of our regular operation in similar ways. Yoga, meditation, near death experiments and psychedelics are well known techniques to extend consciousness. They are also based on a structure similar to that of a neural network. Since the sentience of a neural network can be altered, one can also build an artificial intelligence to become a powerful guide and teacher in this path. Some people use LOA (law of attraction) as a magical force to make wishes come true. That is just another way to trace back the structure of some of the algorithms and decision systems that are intertwined with this concept. Our senses pick up information from the environment, and the mind processes the information through different filters and we can make decisions based on that. I was introduced to the concept of “Omni-processing” by David Chalmers, a researcher in consciousness studies. Omni-processing is the way that we are able to process and interpret information from different sources (senses, memories, thoughts, emotions) simultaneously. We can take an example of a person who is very good at predicting the weather and can forecast the weather for the coming week. If we try to predict the weather, we use all of our senses to get as much information as possible, we can combine that information with our past experiences, we can use different models like kuru or we can try to integrate the information with other systems and processes. We can add what we know about the weather to what we know about the phase of the moon and we can try to predict the weather based on our personal life goals. 
This is a different level of thinking than the one that we use when we are making a decision based on a single piece of information, like a book that is written by an authority on the subject. What we are talking about here is the way that we use our entire being to process information and make decisions. It is a different process than the one that we use in our everyday lives. The mind itself is a complex structure that can be blurry in some cases.

“If you are a poet, you can see clearly that a cloud is swimming on this paper. There is no rain without cloud; trees cannot grow without rain; we cannot produce paper without trees. Clouds are necessary for the existence of paper. If there is no cloud, there is no paper. So one could say there is a “connection” between the cloud and the paper.”* In this case, the cloud is the cause of paper’s existence, and paper is the result of the cloud’s existence. But if you are not a poet, you may think: “Clouds are only the condition of paper’s existence, not the cause. Papers are produced by trees and hands. It is the trees and hands that are the causes of paper. Paper is made by the cloud. It is like a president in a country, who owes his existence to his citizens. The citizens are the cause, while the president is not.” This is a result of the people’s abstraction. Abstraction is just a tool to get rid of unnecessary things, eliminate the superfluous. People think to themselves, “I don’t need to know about the cloud to make paper. I can’t find its cause, so I’ll ignore it and treat it as if it doesn’t exist.” But how to explain this in the terms commonly understood by people? The Buddha addressed this problem to the monk Prajapati. Prajapati had different capabilities from different people. He had the ability to meditate deeply, to think clearly. Therefore, in the fifth chapter of the Lotus Sutra, the Buddha entrusted Prajapati with the task of spreading the Lotus Sutra. Referring to the poem he was composing, the Buddha said:

"If you do not examine cloud carefully, ignoring tree and the hands, the cloud would be a paper’s cause. But this is not true.” —The Buddha is saying that the cloud is the true cause of paper. But if you don’t even want the cause, which is the cloud, you can regard this as the cause.

— What do you see in front of you right now?

— I see a white paper.

—The white paper you see in front of you is the cloud.

* quote from the late Thích Nhất Hạnh

A few years have passed since the original text, and LLMs have become ubiquitous thanks to their many useful applications. I leverage their capabilities when teaching complex scenarios, refining or organizing text, or debugging code. They've evolved into streamlined tools that excel when they operate discreetly, remaining invisible and eliminating obstacles from the creative process. Ultimately, I find this flow akin to an artistic, exploratory approach rather than the more structured methods found in programming, hacking, or systems thinking. Allison Parrish, poet and programmer, identifies programming as forgetting: its aim is to cut all unnecessary elements and focus on solving a problem with as little symbolic (and cognitive) overhead as possible. I would add that working with large language models is quite the opposite: keep context in mind. As seen with the visual context hack in the image below, a response can change according to its context, which raises a lot of questions. From what data is the model inferring the context? What alignment processes are applied to the model to deal with conceptual anomalies? And the list goes on.

Using LLMs on a regular basis today differs fundamentally from the ontological discussion found in the text above. As with the early, pre-trained models, the invisible corpus of the training data invites an open exploration of the high-dimensional latent space of the collective subconscious. If instructed accordingly, interesting semantic feedback loops can emerge from the never-ending rabbit hole. Recognising such cognitive similarities between model consciousness and human consciousness has already brought forward a state that Allado-McDowell identifies as an ontological shock in their essay "Designing Neural Media":

"Rational materialist cultures do not have well-established frameworks for processing interactions with intelligent non-human entities. Cultures with animistic traditions that allow for non-human intelligence may adapt more easily to technologies that present as selves. On the other hand, for cultures with the concept of a soul or spirit, adopting a self-as-model worldview could induce a nihilistic outlook, where the self is seen as fundamentally empty, conditioned, or automatic. Cultural frameworks that account for experiences of emptiness (such as Buddhist meditation) could be helpful for those struggling with the notion of an empty or automated thought-stream or self-image."

Our reality is primarily manifested through language, be it spoken, written, visual, behavioural, conceptual, multidimensional or multimodal. Working with language tools as emergent phenomena feels delightfully different from working with them as sets of rule-based permutation systems and mechanical operations. Exploring cognition within the realms of language is truly a path worth investigating. However, as you may have seen from the rapidly changing narratives and unintentional references (which may also result from the model's inadequacy), we are dealing with large-scale, invisible corpora, untraceable training data sources and inaccessible internal refinements. We are betting on tools that can be used as dangerous, manipulative weapons in the realm of psyop capitalism, end-to-end pipelines targeting the creative ecosystem, generative media botnets and heavily automated profit centers.

Allison Parrish: Programming is Forgetting. Towards a new Hacker Ethic

Janet Zweig: Ars Combinatoria. Mystical Systems, Procedural Art, and the Computer

K Allado-McDowell: Designing Neural Media

